🏛️ Summary of AI Court Cases

Purpose: Despite the value of Machine Learning (ML) in video generation, chatbots, protein folding, and other applications, Artificial Intelligence (AI) has harmed many individuals, giving rise to a growing number of court cases. Ideally, the field of AI can adapt to explain how, when, and where models are responsible for negative outcomes, making it possible to evolve model development and deployment practices and to account for existing grievances. To understand "how AI can go wrong," I categorize real-world court cases. I then consider whether and where eXplainable AI (XAI) could provide information that is useful in a court of law. This review is limited: it summarizes a single source comprising 201 documented court cases from across the United States, collected between 2017 and 2024 (link here).

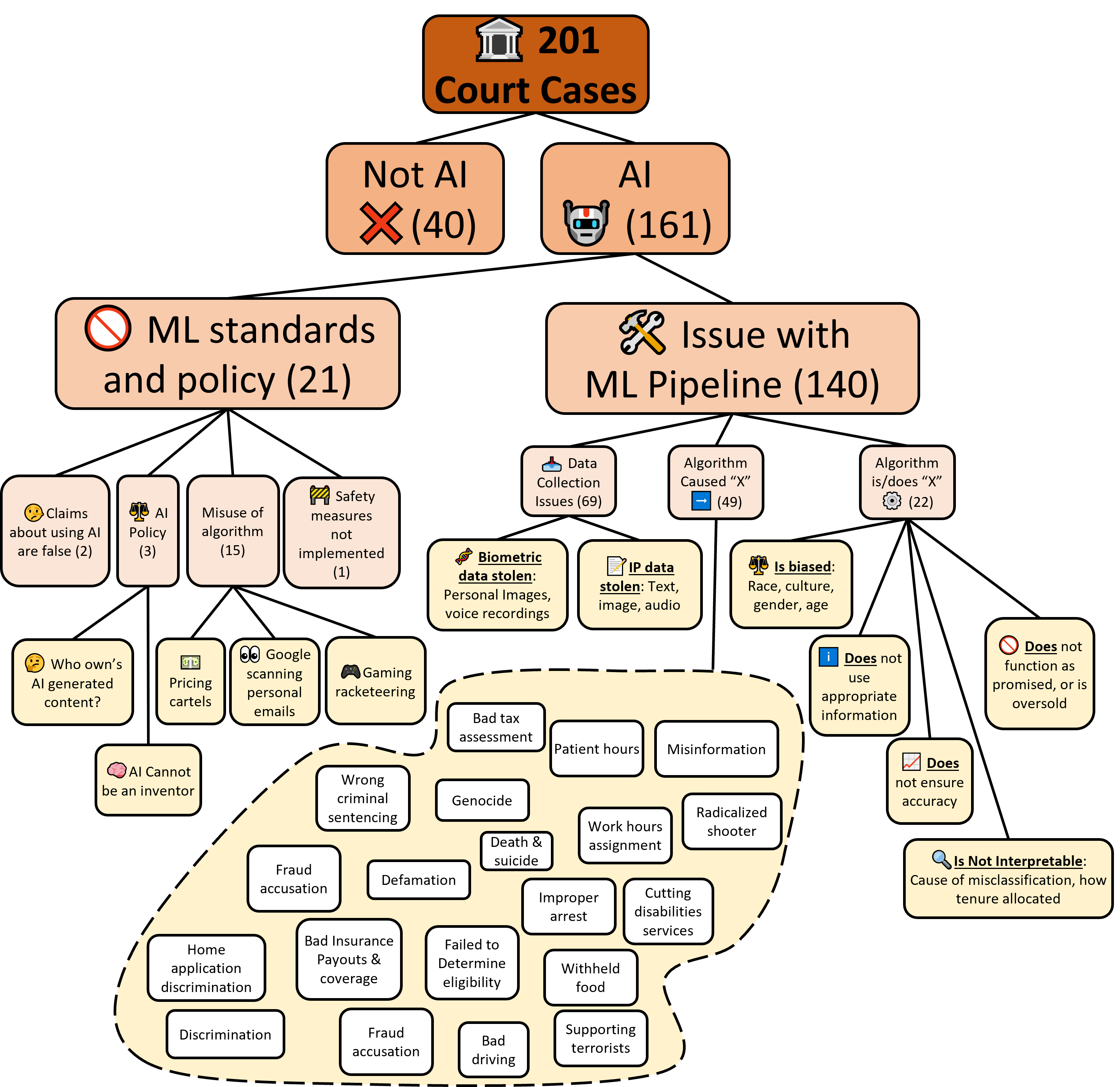

Methodology: To find court cases that illuminate potential research directions for XAI, I read through the case descriptions and wrote key phrases to group each case, reusing key phrases where possible. On review, 41 of the reported court cases did not explicitly mention AI, so I excluded them. The cases mentioning AI (160 cases) either described standards and policy issues related to ML (21 cases) or discussed data collection, model performance, and model impact (140 cases related to the "ML pipeline"). Some key observations are described below.
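The grouping above was done by hand. As a rough illustration only, an automated analogue of the key-phrase step could look like the sketch below. The case records, labels, and search terms are hypothetical placeholders and are not taken from the reviewed source.

```python
from collections import defaultdict

# Hypothetical case records: IDs and descriptions are illustrative
# placeholders, not entries from the reviewed source.
CASES = [
    {"id": "case-A",
     "description": "Biometric data collected without consent to train a face recognition model."},
    {"id": "case-B",
     "description": "Proposed state policy on disclosure standards for automated decision tools."},
    {"id": "case-C",
     "description": "Contract dispute over a warehouse lease."},
]

# Hypothetical key phrases (labels) and the terms that trigger them.
# Reusing the same labels across cases keeps the groups comparable.
KEY_PHRASES = {
    "data collection": ["biometric", "copyright", "scraped"],
    "standards and policy": ["policy", "standards", "regulation"],
    "model impact": ["crash", "misinformation", "scheduling"],
}


def tag_case(description):
    """Return the sorted labels whose terms appear in the description."""
    text = description.lower()
    return sorted(
        label
        for label, terms in KEY_PHRASES.items()
        if any(term in text for term in terms)
    )


def group_cases(cases):
    """Map each label to the IDs of the cases tagged with it."""
    groups = defaultdict(list)
    for case in cases:
        # Cases matching no term are kept under "untagged" for manual review.
        for label in tag_case(case["description"]) or ["untagged"]:
            groups[label].append(case["id"])
    return dict(groups)


if __name__ == "__main__":
    for label, ids in group_cases(CASES).items():
        print(f"{label}: {ids}")
```

Simple term matching like this would only be a first pass. A case that lands in "untagged" has not necessarily failed to mention AI, so a manual read would still be needed before excluding it.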

Findings: How can the XAI community create tools that are helpful in a court of law? In other words, what information can we present before a court that would help it decide whether a person, a company, or a model is at fault for a given accusation? I do not answer this question, but I provide some grounds for continued thought.

XAI seems to be most useful in court cases related to (1) understanding how/whether sensitive data was collected and used to train a model, (2) verifying certain model properties, and (3) evaluating whether a model caused a given event. These topics are described briefly below:

- Data Collection: The majority of court cases discussing model data address whether private or copyrighted data was collected and used to train a model. Some cases may be resolved by determining whether private, biometric, or copyrighted data exists in a dataset. In other cases, datasets may be manipulated to conceal Intellectual Property (IP) that was used to train a model. In these cases, XAI methods could (1) produce evidence of dataset manipulation, or (2) predict the probability of certain IP being in a dataset given a certain model output.

- Model is "X": Some cases debate whether a model has certain properties, including claims about model accuracy, interpretability, functionality, or biases. In these cases, XAI methods could provide evidence describing whether models have certain properties.

- Model caused "X": In other cases, plaintiffs argue that a model has negatively impacted either individuals or groups of people. Cases argue that models caused autonomous vehicles to crash, airlines to provide misinformation, staff to be assigned unfair or inadequate hours, and so on. While XAI could help differentiate between cases where a model cannot, can, or did produce certain outputs (which again amounts to studying model behavior), it seems challenging to explain whether a model "caused" or should be held responsible for negative use and impact. If an autonomous vehicle algorithm did not stop a car from crashing, resulting in a driver's death, was the model at fault for being poorly trained, or the driver for not taking control? In these circumstances, there may be value in explaining whether a model's behavior meets certain behavioral or safety requirements.
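Item (2) under Data Collection, predicting the probability that certain IP was in a dataset given a model output, can be framed with Bayes' rule. The sketch below is one minimal way to express that framing. It is not a method drawn from the reviewed cases. The function name and all input probabilities are hypothetical, and estimating those inputs reliably is the hard part that an XAI method would need to address.

```python
def membership_posterior(prior, p_output_if_member, p_output_if_not_member):
    """Bayes' rule: P(IP was in the training data | observed model output).

    prior                  -- assumed P(IP in training data) before seeing the output
    p_output_if_member     -- assumed P(output | IP in training data)
    p_output_if_not_member -- assumed P(output | IP not in training data)
    """
    for p in (prior, p_output_if_member, p_output_if_not_member):
        if not 0.0 <= p <= 1.0:
            raise ValueError("all inputs must be probabilities in [0, 1]")

    # Total probability of observing the output under both hypotheses.
    evidence = (p_output_if_member * prior
                + p_output_if_not_member * (1.0 - prior))
    if evidence == 0.0:
        raise ValueError("the observed output has zero probability under both hypotheses")

    return p_output_if_member * prior / evidence


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    print(membership_posterior(prior=0.5,
                               p_output_if_member=0.8,
                               p_output_if_not_member=0.2))
```

With these illustrative inputs, an output that is four times more likely when the IP was in the training data raises an even prior to a posterior of about 0.8. The answer is only as trustworthy as the two likelihood estimates that go into it.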

There are certainly more uses for XAI in a court of law, and there are many challenges in providing useful information in these scenarios. However, I hope this discussion helps interested readers summarize challenges of deploying AI in practice, and consider whether developments in XAI could be valuable in a courtroom when AI goes wrong.

(Wormald 11.02.2024)