Hardware Acceleration of Machine Learning

Purpose: What if we could increase machine learning inference speeds by 10-100X? What if you didn't need massive supercomputers and could accelerate machine learning right on your lab bench? That's the purpose of this work.

Background: At the time of writing, my dissertation research focuses on hardware acceleration of machine learning systems. This approach compiles machine learning models to a Hardware Description Language (HDL) such as Verilog, which can then be synthesized and loaded onto a Field Programmable Gate Array (FPGA). Once a model is implemented directly in hardware, some machine learning algorithms can make a prediction in fewer clock cycles than they would need on a general-purpose processor. Partial inspiration for this work came from a hackathon with several of my mentees, where we won most popular project!
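To make the idea concrete, here is a minimal sketch of the kind of integer-only arithmetic that tends to map well onto FPGA logic. It is an illustration under assumptions, not the model from this project: the linear classifier, the 8-bit fractional format (`FRAC_BITS`), the function names, and the toy numbers are all hypothetical.

```python
import numpy as np

FRAC_BITS = 8  # assumed fixed-point format: 8 fractional bits (hypothetical choice)


def quantize(values, frac_bits=FRAC_BITS):
    """Scale floats by 2**frac_bits and round to signed integers."""
    scaled = np.asarray(values, dtype=np.float64) * (1 << frac_bits)
    return np.round(scaled).astype(np.int64)


def predict_fixed_point(weights_q, bias_q, pixels_q, frac_bits=FRAC_BITS):
    """Integer-only linear classifier: index of the largest W @ x + b score."""
    # Each product of two fixed-point values carries 2 * frac_bits fractional bits.
    scores = weights_q @ pixels_q
    # Shift the bias left so it lines up with the accumulated products.
    scores = scores + (bias_q << frac_bits)
    return int(np.argmax(scores))


# Toy 3-class, 2-pixel example (made-up numbers, not MNIST weights).
weights = quantize([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
bias = quantize([0.0, 0.0, 0.0])
pixels = quantize([0.25, 0.75])
predicted_class = predict_fixed_point(weights, bias, pixels)
print(predicted_class)
```

In hardware, the multiply-accumulate and the final comparison would become fixed-width integer logic, which is the kind of structure an HDL such as Verilog describes directly.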

Summary: Here are the software and project details, though I won't make you leave this page for a summary. In short, we implemented a classification algorithm on the DE10-Lite FPGA that identifies images in the MNIST dataset with 93% accuracy. The system processes images in real time and displays the appropriate class labels on the LED interface. A video demonstration of this classification capability can be seen here.

(I apologize for the low video quality - this was recorded on veryyyyy little sleep about 15 minutes before the project submission was complete. I love hackathon timelines.)

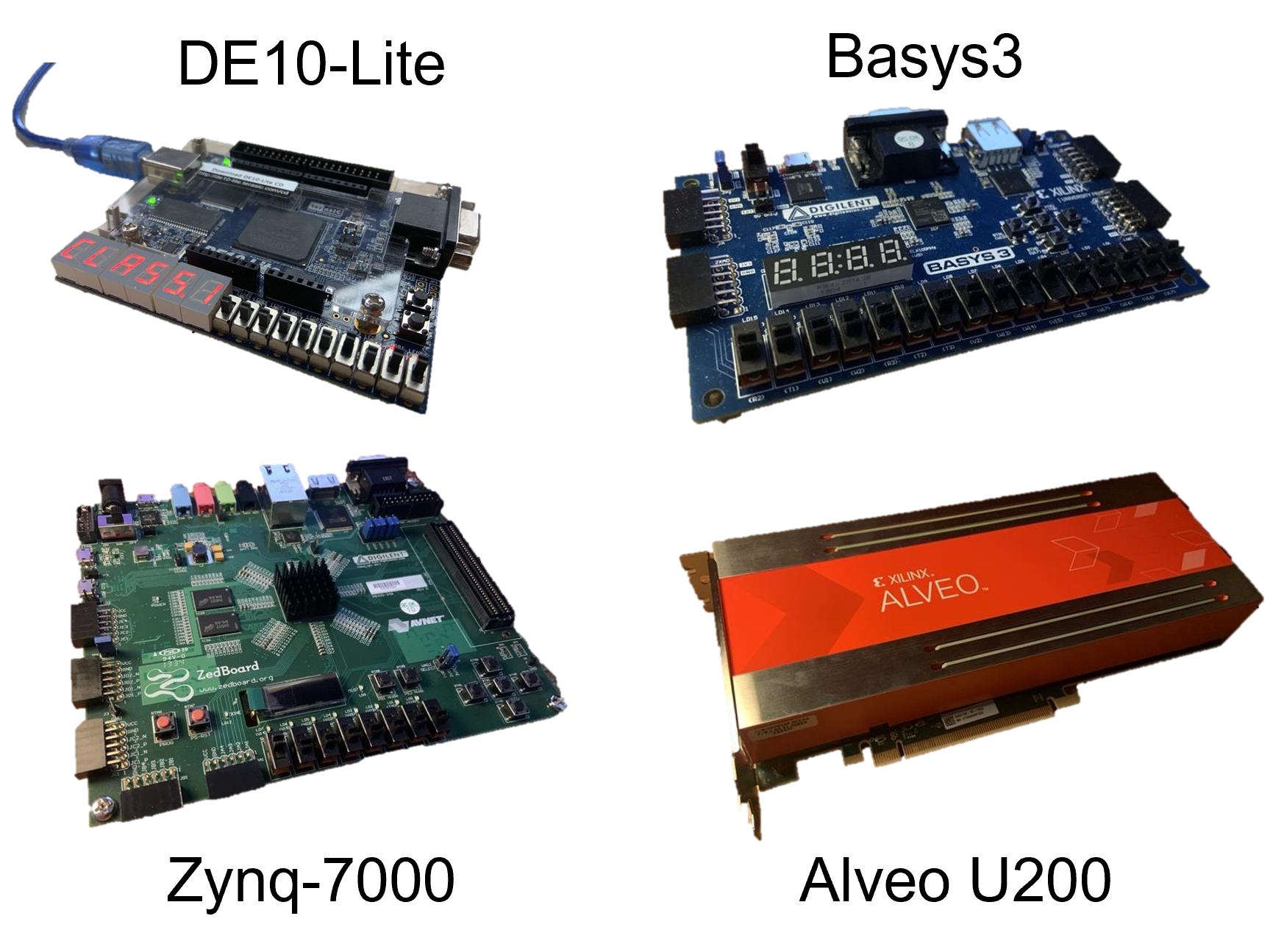

Right now, I'm focusing on determining what model sizes can be run on FPGAs of different sizes. A lot of my work involves the Alveo U200, which is similar to a graphics card but can be programmed through the appropriate interface (see PYNQ here). Here are a few devices you could look into if you're interested. The smaller devices can only run limited machine learning model sizes, so be careful what you decide on. If you want to learn more about the model sizes that can be run on these devices, stay in touch! I'll be publishing on this soon.

- DE10-Lite: Smaller device, cheap, good for initial tests, based on Intel hardware architectures and programmed using Quartus

- Basys3: Smaller device, cheap, good for initial tests, based on AMD hardware architectures and programmed with the Vivado Design Suite

- ZedBoard Zynq-7000: Good medium-sized device, slightly more expensive, many I/O types, based on AMD hardware architectures and programmed with the Vivado Design Suite

- Alveo U200: Large-format device with a TON of memory, but more challenging to get started with. Much more expensive, PCIe or fiber-optic I/O, based on AMD hardware architectures and programmed using Vivado and Vitis

Figure 2: Some FPGA boards where I've gotten machine learning models running
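As a rough illustration of the "will this model fit?" question (and not the sizing method from the forthcoming work), a first-pass check can compare a model's weight storage against a board's on-chip memory budget. Everything below is an assumption for the sake of the sketch: the `Board` class, the fully connected layer layout, the convention that 1 Kbit is 1024 bits, and especially the memory budget, which is a placeholder rather than a datasheet value.

```python
from dataclasses import dataclass


@dataclass
class Board:
    name: str
    on_chip_kbits: float  # placeholder budget, NOT a datasheet value


def weight_kbits(layer_sizes, bits_per_weight):
    """Storage for a fully connected network's weights and biases, in Kbit."""
    params = sum(
        n_in * n_out + n_out  # weight matrix plus one bias per output
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )
    return params * bits_per_weight / 1024  # assumes 1 Kbit = 1024 bits


def fits(board, layer_sizes, bits_per_weight):
    """True if the parameters alone fit within the board's on-chip budget."""
    return weight_kbits(layer_sizes, bits_per_weight) <= board.on_chip_kbits


toy_board = Board(name="hypothetical-small-fpga", on_chip_kbits=1000)

# A single 784 -> 10 layer at 8 bits per weight versus a wider 16-bit network.
small_ok = fits(toy_board, [784, 10], bits_per_weight=8)
large_ok = fits(toy_board, [784, 512, 10], bits_per_weight=16)
print(small_ok, large_ok)
```

This only counts parameter storage. Logic, multipliers, and routing also constrain what runs on a real device, so a check like this is a lower bound on the resources needed rather than a guarantee.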

Running machine learning algorithms on FPGAs is relevant to all sorts of problems in the medical domain, AI, biotechnology, and national security: really anywhere you want real-time predictions from machine learning models, especially if you're interested in integrating FPGAs into control systems. My personal motivation is applying FPGA-based control systems to microfluidics. More to come later!